Key takeaways from Biden’s massive executive order on artificial intelligence

It’s going to take time for tech leaders in both government and private sectors to figure out all the implications of President Joe Biden’s sweeping new executive order regulating artificial intelligence. Since its Oct. 30 release, the 63-page executive order has triggered a whole-government avalanche of agency policy briefings, inquiry launches and press statements. The world’s tech titans have likewise exploded into a flurry of activity, from individual social platform rants to joint statements to the United Nations. Digital rights advocates, meanwhile, are leery of the order’s lack of protection against government surveillance tech.

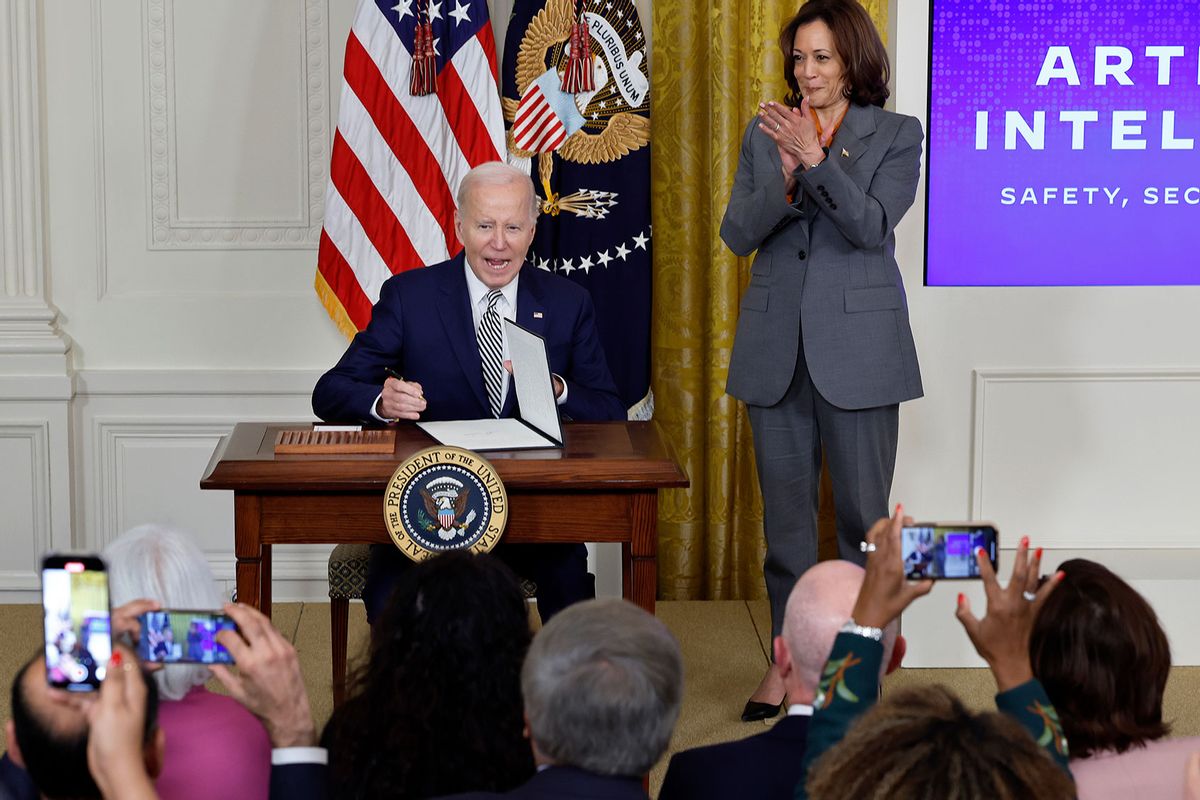

“AI is all around us,” Biden said while signing the order. “To realize the promise of AI and avoid the risk, we need to govern this technology.”

Congress still hasn’t moved on AI regulation, but the Biden administration has been pushing ahead. The new order is the administration’s capstone effort after a year of AI-driven controversy in the capital, which included a series of sobering Congressional hearings on the topic in May and voluntary commitments on AI use that leading AI firms made to the White House in July. It builds on the administration’s earlier Blueprint for an AI Bill of Rights and on the AI Risk Management Framework released by the National Institute of Standards and Technology (NIST).

Agencies will have between 90 and 365 days to comply with the new order — with different deadlines per agency. Here are the main takeaways from the sprawling executive order, and what we know so far.

National security: DHS takes the wheel

The order directs the National Security Council to create rules for the military’s use of AI. But among the alphabet soup of federal agencies now mobilized to roll out new AI policies, none appears to have been granted broader authority than the Department of Homeland Security (DHS).

“The President has asked the Department of Homeland Security to play a critical role in ensuring that AI is used safely and securely,” DHS Secretary Alejandro Mayorkas said in a statement.

DHS’s mandate in the executive order is vast and still vague, but it gives the agency charge over AI development in some of the most powerful areas of government. DHS will create a board to work with the Defense Department on possible cybersecurity threats to critical infrastructure. DHS will also be in charge of addressing any potential for AI to create chemical, biological, radiological and nuclear threats.

“The AI Safety and Security Advisory Board, which I look forward to chairing, will bring together industry experts, leading academics and government leaders to help guide the responsible development and safe deployment of AI,” said Mayorkas, adding that his 2023 AI Task Force has previously “developed guidance on the acquisition and use of AI technology and the responsible use of face recognition technologies.”

Noting DHS’s poor track record on data handling, Tech Policy Press CEO Justin Hendrix said the broad mandate may be cause for concern.

“A new Brennan Center report considers its use of automated systems for immigration and customs enforcement, finding a lack of transparency that is in tension with the principles set out in the White House Blueprint for an AI Bill of Rights. Earlier this year, reports emerged about an app using facial recognition deployed by DHS that failed for Black users,” Hendrix wrote.

“There remain substantial concerns about the department’s surveillance activities. Perhaps the Department will have to improve its own record before it can reliably lead others on AI governance and safety,” he said.

Renika Moore, the ACLU’s Racial Justice Program Director, attended the order’s signing. She said that while she is encouraged by the Biden administration’s whole-of-government approach, the order “kicks the can down the road” on protecting people from law enforcement’s use of AI tech.

The ACLU’s Cody Venzke, a senior policy counsel, also pointed to the government’s own unchecked use of AI-driven surveillance.

“The order raises significant red flags as it fails to provide protections from AI in law enforcement, like the role of facial recognition technology in fueling false arrests, and in national security, including in surveillance and immigration,” Venzke said in a statement. “Critical work remains to be done in other areas, including addressing the government’s purchase of our personal information from data brokers.”

Immigration: New paths possible

Some parts of the order could prove more promising for Democrats, however. The order aims to push a long-overdue update to immigration rules, opening a potential path to citizenship for noncitizen AI experts and STEM students as Biden seeks to bolster thin U.S. tech ranks.

“This change, if it were to go into effect, would be a significant change that would allow U.S. employers to sponsor noncitizen AI professionals for permanent residence without going through the burdensome labor certification process. The list of Schedule A occupations has not changed for decades, so it is high time that the list be expanded even beyond AI occupations to include others for which there are a shortage of U.S. workers, such as other computer occupations,” wrote immigration experts Cyrus Mehta and Kaitlyn Box.

“However, the executive order itself in many instances merely directs the relevant agencies to ‘consider initiating a rulemaking’ to implement the changes… Unless Congress acts fast to infuse more visas in the legal immigration system, the changes in the executive order may prove to be only window dressing,” the two said in an article distributed by the LexisNexis Immigration Blog.

Notably, LexisNexis secured a $22.1 million government contract to circumvent state sanctuary laws by tracking down and surveilling immigrants on behalf of Immigration and Customs Enforcement (ICE). As for Hendrix’s concern over DHS data handling, ICE’s immigrant detention numbers have grown in recent weeks, but no one is currently certain how many people are locked in its custody because the agency routinely defies Congressional orders to publish its numbers.

Digital rights and consumer privacy

While it is unclear whether government contractors like LexisNexis will be exempt from the specifics of the order, other government contractors that pool Americans’ data may have to re-evaluate any potential use of AI.

Legal intelligence firm JD Supra weighed in on the shifting landscape that private companies would be facing while pursuing government contracts.

“The executive order requires implementation in the form of agency-issued guidance and potentially legislation to effectuate some of its more ambitious aspects. Given the executive order’s tight deadlines, in the coming months we expect to see new agency-level AI policies, as well as requests for information and requests for comments on proposed rules,” the firm wrote.

NIST has already begun work on building the framework for those new agency-level policies. It has also launched a new AI Safety Institute Consortium.

“This notice is the initial step for NIST in collaborating with non-profit organizations, universities, other government agencies, and technology companies to address challenges associated with the development and deployment of AI,” the institute wrote in its Nov. 2 document.

Initial reactions from privacy advocates are cautiously optimistic, but not without skepticism. The non-profit Future of Privacy Forum called the Biden plan “incredibly comprehensive” and echoed the administration’s call for bipartisan privacy legislation.

“Although the executive order focuses on the government’s use of AI, the influence on the private sector will be profound due to the extensive requirements for government vendors, worker surveillance, education and housing priorities, the development of standards to conduct risk assessments and mitigate bias, the investments in privacy enhancing technologies, and more,” the group said in a statement.

The Electronic Frontier Foundation was among several civil rights groups that urged Congress, in an October letter, to act on such protections.

Following the order’s release, EFF analyst Karen Gullo said she was glad to see anti-discrimination provisions and clauses aimed at “strengthening privacy-preserving technologies and cryptographic tools.” But, she said in a recent interview with the AARP, the executive order is “full of ‘guidance’ and ‘best practices,’ so only time will tell how it’s implemented.”

Alexandra Reeve Givens, president of the Center for Democracy & Technology, said the order could be useful in addressing ongoing AI problems in the workplace, housing and education.

Startups: Register computers with the government?

The largest flashpoint in the debate around this order has been the new requirements it creates for companies developing large-scale AI models, in particular any models that could pose a threat to national security. Those companies will have to disclose the results of their safety tests to the government. However, no one yet knows how to determine which models could pose such a threat.

In the meantime, any company training a large AI model using computing power above a particular threshold will have to report its plans to the government. That threshold, 100 million billion billion operations (10^26), has never been met.

“The order’s focus on computing power thresholds and potential restrictions on fine-tuning and open-source models raises concerns about the impact on smaller entities and the broader AI community,” writes John Palmer for Cryptopolitan. “While there are elements of the order that could be beneficial for innovation and talent in the AI industry, the potential for regulatory capture and the centralization of AI power remain significant concerns.”

Well-known tech venture capitalists such as Bill Gurley are echoing this warning about regulatory capture, pointing to the security implications of greater transparency in AI development. Gurley argues that open source AI models need more protection, not less, if politicians are concerned about AI’s ability to influence voting decisions and manipulate the truth.

“If you believe that, wouldn’t you be more comfortable with an open source model where academics can see all the code, what’s happening, and what data sources are in this thing versus a proprietary system where you have no visibility?” he told Barron’s Tae Kim.

Big names in the startup world aren’t the only ones afraid to see AI power centralized, though. Open models such as Meta’s Llama 2 and Mistral AI’s Mistral 7B could both lose ground. Yann LeCun, chief AI scientist at Meta, tweeted that he is kept up at night by what could happen “if open source AI is regulated out of existence.”

“A small number of companies from the West Coast of the US and China will control AI platforms and hence control people’s entire digital diet. What does that mean for democracy? What does that mean for cultural diversity?” LeCun said.
