Artificial intelligence could lead to extinction, experts warn

Artificial intelligence could lead to the extinction of humanity, experts, including the heads of OpenAI and Google DeepMind, have warned.

Dozens of experts have supported a statement published on the webpage of the Centre for AI Safety.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” it reads.

But others say the fears are overblown.

Sam Altman, chief executive of ChatGPT-maker OpenAI; Demis Hassabis, chief executive of Google DeepMind; and Dario Amodei of Anthropic have all supported the statement.

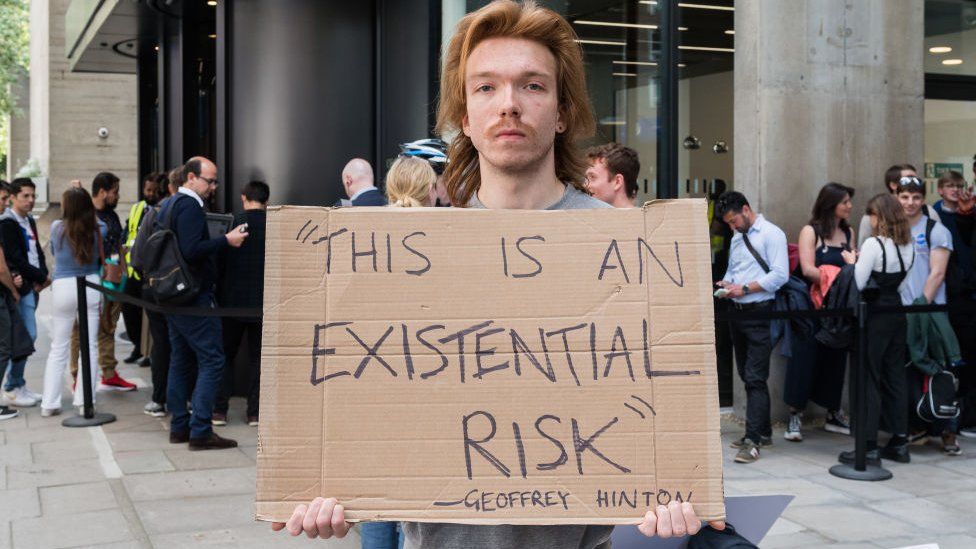

Dr Geoffrey Hinton, who issued an earlier warning about risks from super-intelligent AI, has also supported the call.

Yoshua Bengio, professor of computer science at the University of Montreal, also signed.


Dr Hinton, Prof Bengio and NYU professor Yann LeCun are often described as the “godfathers of AI” for their groundbreaking work in the field, for which they jointly won the 2018 Turing Award, which recognises outstanding contributions in computer science.

But Prof LeCun, who also works at Meta, has said these apocalyptic warnings are overblown.


Many other experts similarly believe that fears of AI wiping out humanity are unrealistic, and a distraction from problems that already exist, such as bias in AI systems.

Arvind Narayanan, a computer scientist at Princeton University, has previously told the BBC that sci-fi-like disaster scenarios are unrealistic: “Current AI is nowhere near capable enough for these risks to materialise. As a result, it’s distracted attention away from the near-term harms of AI”.

Pause urged

Media coverage of the supposed “existential” threat from AI has snowballed since March 2023 when experts, including Tesla boss Elon Musk, signed an open letter urging a halt to the development of the next generation of AI technology.

That letter asked if we should “develop non-human minds that might eventually outnumber, outsmart, obsolete and replace us”.

In contrast, the new campaign consists of a very short statement, designed to “open up discussion”.